Designed for Today’s LLM Use Cases.

Engineered for What Comes Next.

solution 1

Consumer-Facing AI Safety

For travel, e-commerce, and consumer apps that use LLM chatbots, brand trust hinges on every word. Cyvia helps product and security teams keep chatbots resilient to attacks, preempt unsafe or off-brand responses, test edge cases, and review conversations at scale.

Red-teaming tools to simulate adversarial inputs

Configurable allow & block logic for sensitive topics or PII

Output safety tagging for hallucination, over-disclosure, and risky links
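To make the allow & block idea concrete, here is a minimal sketch of what such a check could look like. It is not Cyvia's API: `TopicPolicy`, `check_message`, and the two regular expressions are hypothetical names and patterns chosen for illustration, and real PII detection needs far more than two regexes.

```python
import re
from dataclasses import dataclass, field

# Hypothetical patterns for illustration only; not a complete PII detector.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{8,}\d")


@dataclass
class TopicPolicy:
    """Hypothetical policy: topics to block, plus a PII switch."""
    blocked_topics: set[str] = field(default_factory=set)
    block_pii: bool = True


def check_message(text: str, policy: TopicPolicy) -> list[str]:
    """Return the reasons a message would be blocked (empty list means allowed)."""
    reasons = []
    lowered = text.lower()
    # Naive substring match on topic names; a real system would classify intent.
    for topic in sorted(policy.blocked_topics):
        if topic in lowered:
            reasons.append(f"topic:{topic}")
    if policy.block_pii:
        if EMAIL_RE.search(text):
            reasons.append("pii:email")
        if PHONE_RE.search(text):
            reasons.append("pii:phone")
    return reasons
```

A caller would run `check_message` on both the user prompt and the model response, and block or tag the exchange whenever the returned list is non-empty.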

solution 2

Developer & Internal LLM Governance

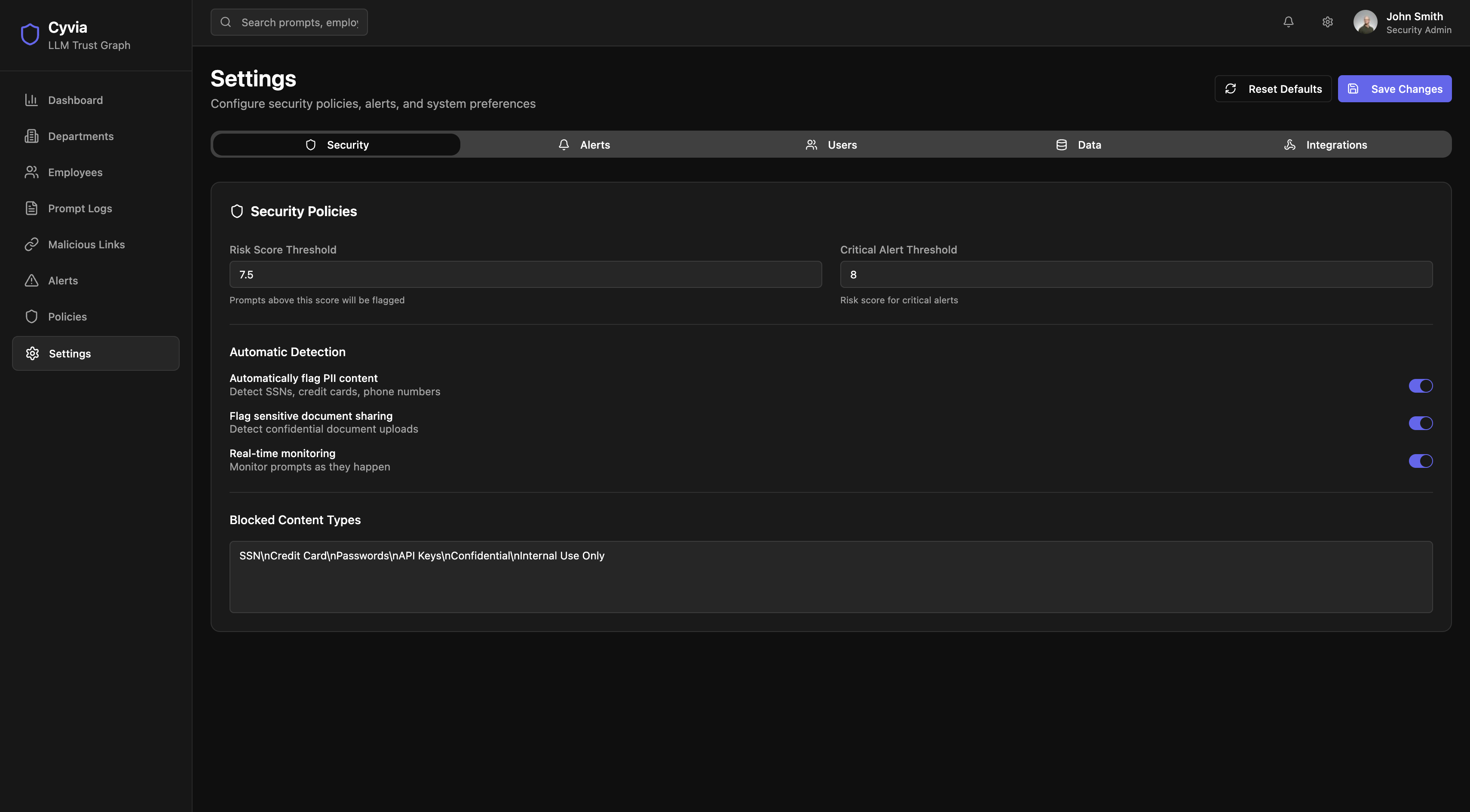

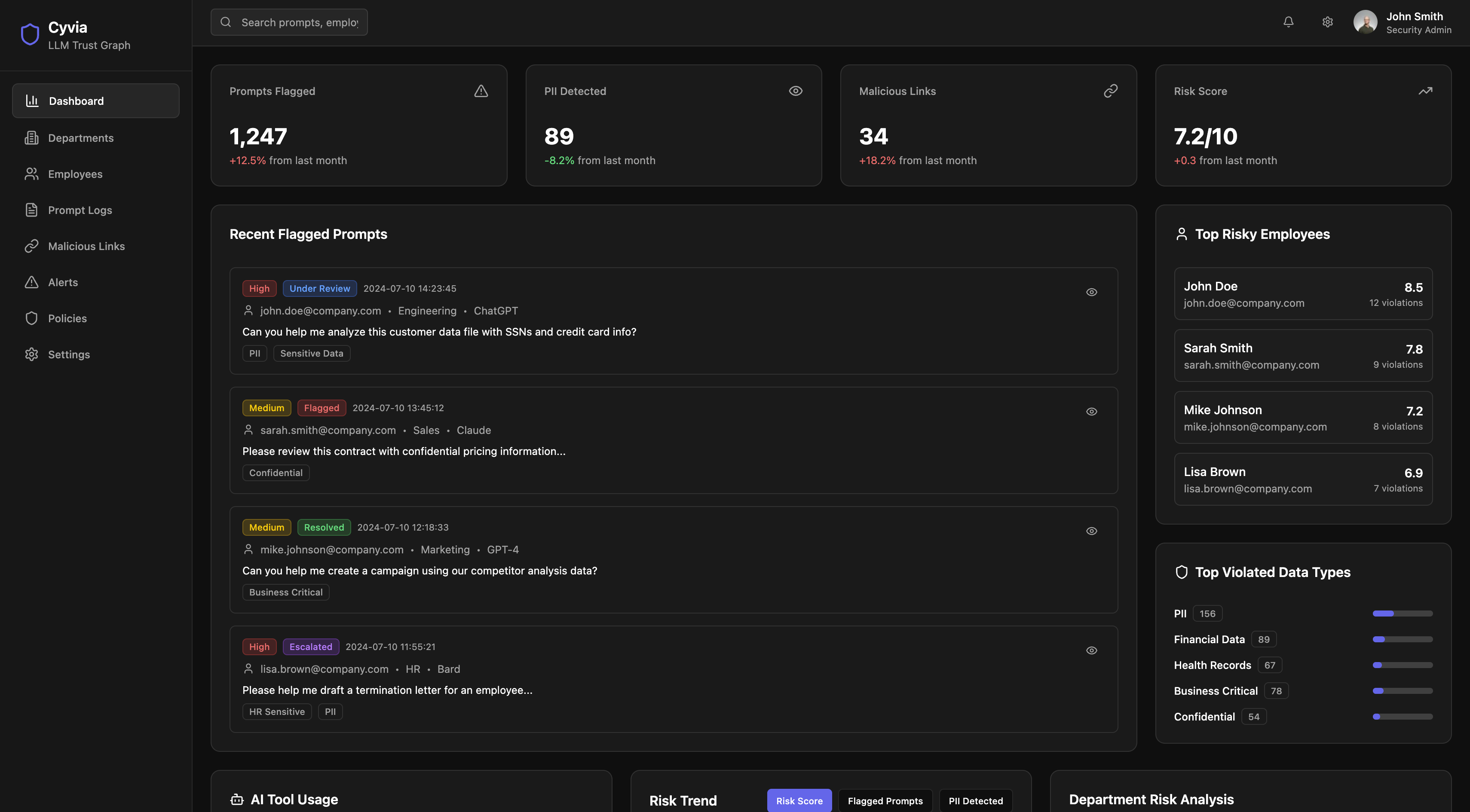

Your developers use models to write code, answer queries, and summarize data. Cyvia helps ensure these LLM tools don’t inadvertently leak secrets or produce risky outputs inside your enterprise.

Real-time prompt-response monitoring for dev tools

Detection of API misuse, unintended code generation, or IP leakage

Role-based insights into who asked what and why

solution 3

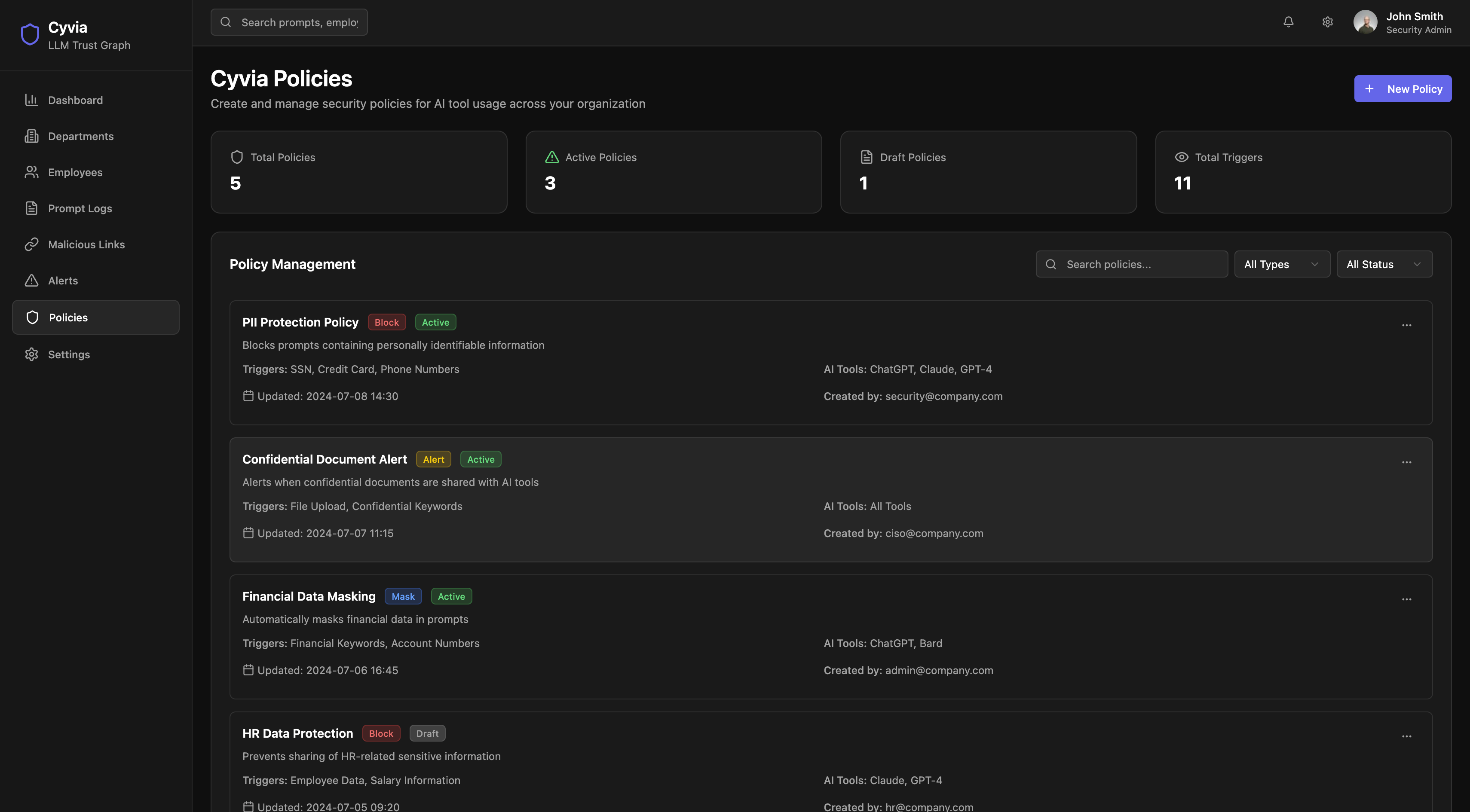

Compliance with AI-Driven Workflows

Regulators are catching up to AI use. Cyvia keeps you ahead by recording, analyzing, and classifying every LLM exchange with context, risk level, and business impact in view.

Semantic labeling aligned to data governance policies

Exportable logs for audits or AI incident response

Custom policies by region, role, or use case

solution 4

AI Product Observability for Builders

Building an AI feature into your app? Cyvia gives product and ML teams the lens they need to observe live model behavior, test in staging, and safely iterate without shipping risky interactions.

Drop-in API hooks for dev/staging/test environments

Semantic diffing of model responses across versions

Prompt-replay and response feedback loop tooling
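As a rough illustration of comparing model responses across versions, the sketch below replays the same prompts against two runs and flags the ones whose answers changed substantially. It is an assumption-laden stand-in, not Cyvia's implementation: `response_drift` and `flag_drifted_prompts` are hypothetical names, and the word-level similarity from Python's standard `difflib` is a lexical proxy, whereas a true semantic diff would compare meaning (for example with embeddings).

```python
from difflib import SequenceMatcher


def response_drift(old: str, new: str) -> float:
    """Return 1 - word-level similarity: 0.0 is identical, 1.0 shares no words."""
    return 1.0 - SequenceMatcher(None, old.split(), new.split()).ratio()


def flag_drifted_prompts(
    old_run: dict[str, str],
    new_run: dict[str, str],
    threshold: float = 0.5,
) -> list[str]:
    """List prompts whose responses changed by more than `threshold` between runs."""
    flagged = []
    for prompt, old_response in old_run.items():
        new_response = new_run.get(prompt)
        if new_response is None:
            continue  # prompt was not replayed against the new version
        if response_drift(old_response, new_response) > threshold:
            flagged.append(prompt)
    return flagged
```

The threshold of 0.5 is arbitrary; in practice it would be tuned per use case, and flagged prompts would go to a human reviewer rather than being treated as failures automatically.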

Ready to make your LLMs trustworthy by design?

Get Started With Cyvia

Support & Enquiry

hello@cyvia.ai

+917760985188
